This report from The Brookings Institution’s Artificial Intelligence and Emerging Technology (AIET) Initiative is part of “AI Governance,” a series that identifies key governance and norm issues related to AI and proposes policy remedies to address the complex challenges associated with emerging technologies.

Corporate leaders including Google CEO Sundar Pichai, Microsoft President Brad Smith, Tesla and SpaceX CEO Elon Musk, and former IBM CEO Ginni Rometty have called for increased regulation of artificial intelligence. So have politicians on both sides of the aisle, as have respected scholars at academic research institutes and think tanks. At the root of the call to action is the understanding that, for all of its many benefits, AI also presents many risks. Concerns include biased algorithms, privacy violations, and the potential for injuries attributable to defective autonomous vehicle software. With the increasing adoption of AI-based solutions in areas such as criminal justice, health care, robotics, financial services, and education, there will be incentives that pit corporate interests against societal benefits. That conflict raises the question of what systems should be put in place to mitigate potential harms.

The role of soft law

While the dialogue on how to responsibly foster a healthy AI ecosystem should certainly include regulation, that shouldn’t be the only tool in the toolbox. There should also be room for dialogue regarding the role of “soft law.” As Arizona State University law professor Gary Marchant has explained, soft law refers to frameworks that “set forth substantive expectations but are not directly enforceable by government, and include approaches such as professional guidelines, private standards, codes of conduct, and best practices.”

Soft law isn’t new. The authors of a 2018 article in the Colorado Technology Law Journal point out that uses of soft law go back decades. Examples they cite include the U.S. Green Building Council’s 1993 Leadership in Energy and Environmental Design (LEED) certification standards, the Food and Drug Administration’s longstanding practice of issuing non-binding guidance, and, in recent years, explanatory blog posts and tweets from agencies such as the Federal Trade Commission and Federal Communications Commission.

There are other examples as well. Bluetooth, the standard used for short-range wireless communication between pairs of devices like laptops and wireless speakers, was developed through a collaboration among technology companies. Wi-Fi, the set of wireless local area networking (LAN) standards that allows us to connect computers and many other devices to hotspots in homes and offices, was developed by the Institute of Electrical and Electronics Engineers (IEEE). Certification through the Wi-Fi Alliance, a related soft law framework, ensures that products labeled with the Wi-Fi Alliance logo have “met industry-agreed standards for interoperability, security, and a range of application specific protocols.”

While soft law has been applied in many fields, there are multiple reasons why it is particularly well suited for AI. First, as Marchant writes, “the pace of development of AI far exceeds the capability of any traditional regulatory system to keep up.” Congressional legislation and agency administrative rulemaking operate on time scales of years. By contrast, enormous private and public sector investments have spurred rapid AI development. According to the National Venture Capital Association, “1,356 AI-related companies in the U.S. raised $18.457 billion” in 2019. A February 2020 report from the White House Office of Science and Technology Policy (OSTP) stated that, for the government’s 2020 fiscal year, “unclassified, non-defense Federal investments in AI R&D, total[ed] $973.5 million.” Research funded by the Department of Defense includes DARPA’s $2 billion “AI Next” campaign, which was announced in late 2018. With those levels of investment, the AI technology landscape is changing by the month. This rate of development is ill suited to the time scales involved in administrative law, where it often takes more than a year to go from a proposed rule to a final rule.

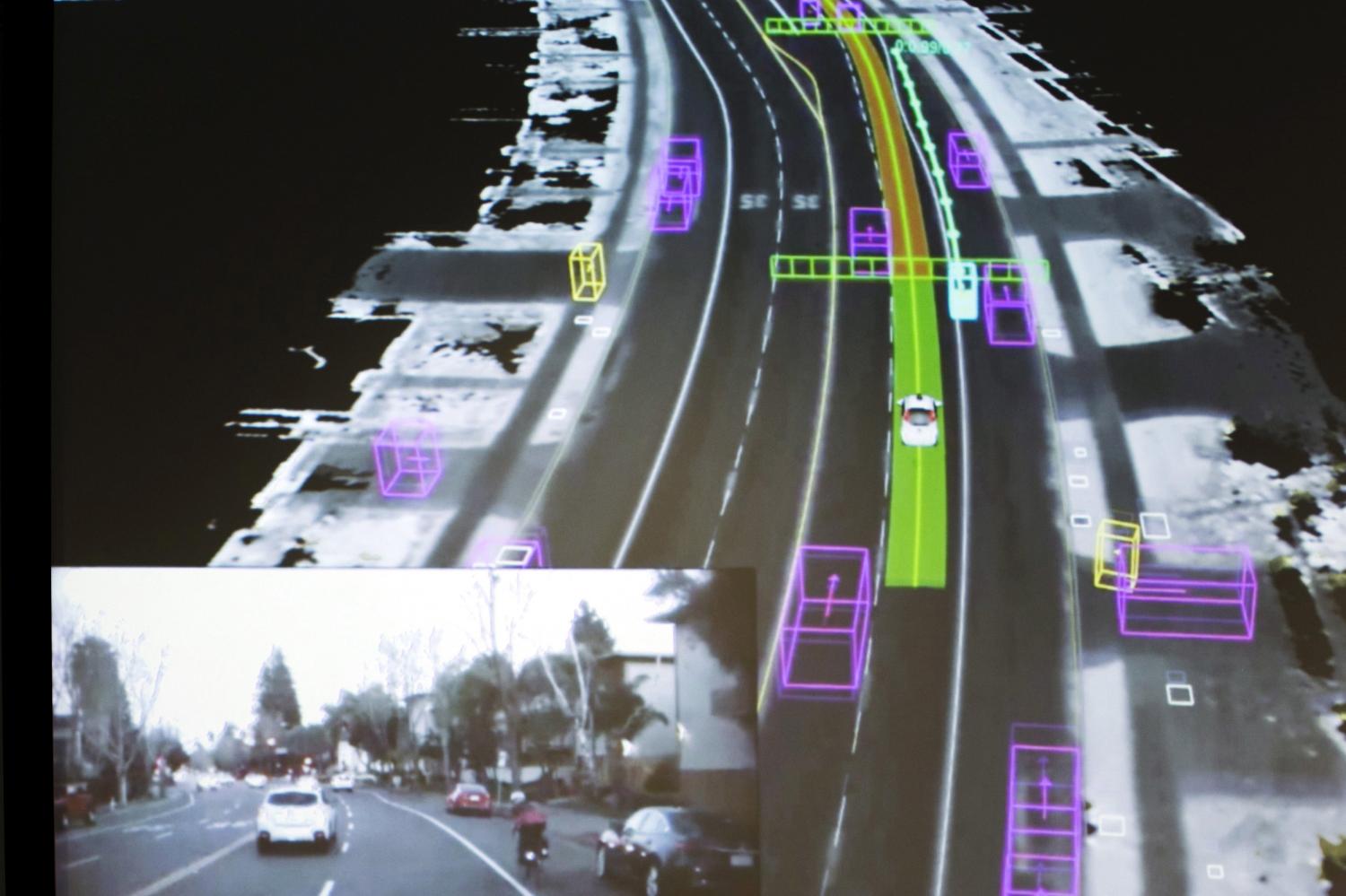

A second reason soft law is suitable for AI is the sheer complexity of the landscape. It would make no sense—financially, logistically, or in terms of staffing—to task one or more government agencies with the rulemaking and oversight extensive enough to cover all of the many applications and industries where AI will be used. For a small subset of applications, such as autonomous vehicles where the potential harms caused by failures are particularly acute, government regulation will play an important role. But most uses of AI aren’t nearly so high stakes. For those applications, soft law developed with input from companies, civil society groups, academic experts, and governments will be a key method to promote innovation consistent with ethical frameworks and principles.

Even for high-stakes applications, soft law can be an important complement to “hard” law. Consider the potential safety advantages enabled by information conveyed through vehicle-to-vehicle (V2V) communication, which can allow coordination among vehicles in close proximity in order to reduce the likelihood of accidents and increase the efficiency of traffic flow. Realizing the potential of V2V will require a combination of regulation from the Department of Transportation (e.g., to address core safety issues such as accident avoidance) and soft law (e.g., in relation to the processes and protocols for wirelessly exchanging information among vehicles).

AI soft law frameworks

Recent years have seen a growing number of AI soft law efforts. One category of AI soft law is principles, laying out high-level goals to guide the development and deployment of AI-based solutions. For instance, the Future of Life Institute has developed the Asilomar AI Principles, which address ethical and research funding issues in the context of AI.

Detailed models for AI governance constitute another form of soft law. Singapore’s Model AI Governance Framework, which was first released in early 2019 and updated in early 2020, is intended to serve as a “ready-to-use tool to enable organizations that are deploying AI solutions at scale to do so in a responsible manner.” It provides specific guidance in the four key areas of internal governance structures and measures, human involvement in AI-augmented decision-making, operations management, and stakeholder interaction and communication.

The increased attention to AI principles and governance approaches has also led to a growing number of resources to catalog and compare them. Algorithm Watch maintains an online AI Ethics Guidelines Global Inventory that, as of June 2020, listed over 150 frameworks and guidelines. An early 2020 publication from a group of researchers at the Harvard Berkman Klein Center provided a comparison of “the contents of thirty-six prominent AI principles documents.” And in a September 2019 article in Nature Machine Intelligence, Anna Jobin, Marcello Ienca, and Effy Vayena “mapped and analysed the current corpus of principles and guidelines on ethical AI.”

Standards, which tend to be highly detailed and technical, are yet another form of soft law. The International Organization for Standardization, which is a “non-governmental international organization with a membership of 164 national standards bodies,” is teaming with the International Electrotechnical Commission to develop a portfolio of AI-focused standards. IEEE is developing AI standards on topics including “transparency of autonomous systems,” “certification methodology addressing transparency, accountability and algorithmic bias,” and the impact of AI on human well-being.

Standards can take years to develop and thus do not always offer a speed advantage over hard law. However, in contrast with hard law, standards are usually created specifically with the intent of promoting innovation within an industry and can be updated relatively quickly as the technology develops. The Wi-Fi standards mentioned earlier, more formally known by the designation 802.11, provide a good example of this in the non-AI context. The IEEE successfully developed more advanced versions of 802.11 for decades as technology advances created opportunities to improve wireless communication speed and reliability.

While laws and regulations are limited by the jurisdiction of the government that enacted them, soft law in the form of international norms and standards can exert influence without raising extraterritoriality concerns. This broader context is particularly relevant for AI. As Peter Cihon of the Future of Humanity Institute at the University of Oxford explained in a 2019 publication, “[AI] presents novel policy challenges that require coordinated global responses. Standards, particularly those developed by existing international standards bodies, can support the global governance of AI development.”

Wendell Wallach and Gary Marchant have also noted the importance of approaching AI governance from an international perspective. In addition, they have observed that the proliferation of soft law approaches to AI can lead to a confusing and sometimes contradictory landscape. They also proposed the creation of an international governance coordinating committee that would “be situated outside government but would include participation by government representatives, industry, nongovernmental organizations, think tanks, and other stakeholders.”

Regulation and soft law for AI

One of the most important AI challenges will be to find the right balance between regulation and soft law. While in some circumstances regulation and soft law can be in conflict, they are more often complementary. Regulation is better suited to AI applications, such as autonomous vehicles, in which failures have a high human cost and product development occurs at time scales similar to or longer than those associated with congressional legislation and agency rulemaking. Regulation is less well matched to uses of AI that are purely software-based. For example, consider AI-based solutions to address online disinformation. This is an area where the landscape changes too quickly for technology-specific regulation. Thus, in some AI domains, a better approach is to use regulation to address higher-level issues, such as the need for transparency, and allow soft law frameworks to be developed by experts who are much closer to the technology than regulators. For instance, representatives from companies, academia, and government can work together to establish standards surrounding specific uses of AI to address disinformation.

There can also be a dynamic, temporal relationship between soft law and hard law. Using hard law to lock in requirements for a particular technology can backfire when new technologies offering improved performance emerge soon thereafter. A better approach is to let soft law guide the development of technologies; once those technologies are proven, the soft law can be used to shape hard-law frameworks. To take a non-AI example: In April 2020, the FCC, recognizing the potential speed increases made possible by the 802.11ax standard (often referred to by the marketing term “Wi-Fi 6”), issued an order opening up new spectrum for use by Wi-Fi devices. After a unanimous vote by FCC commissioners, FCC Chairman Ajit Pai said in a statement:

“In order to fully take advantage of the benefits of Wi-Fi 6, we need to make more mid-band spectrum available for unlicensed use. … So today, we take a bold step to increase the supply of unlicensed spectrum: we’re making the entire 6 GHz band—a massive 1,200 megahertz test bed for innovators and innovation—available for unlicensed use.”

With the increasing use of AI in nearly every aspect of the economy, companies, legislators, civil society groups, and consumers are rightly excited about its promise and concerned about its risks. In order for the AI ecosystem to reach its full potential, we will need a combination of thoughtfully designed regulation structured in a way to avoid rapid obsolescence, and agile soft-law frameworks that can help promote rapid and beneficial innovation among providers of AI solutions.

The author thanks Gary Marchant of the Arizona State University Sandra Day O’Connor College of Law for helpful feedback on this paper.

The Brookings Institution is a nonprofit organization devoted to independent research and policy solutions. Its mission is to conduct high-quality, independent research and, based on that research, to provide innovative, practical recommendations for policymakers and the public. The conclusions and recommendations of any Brookings publication are solely those of its author(s), and do not reflect the views of the Institution, its management, or its other scholars.

Microsoft provides support to The Brookings Institution’s Artificial Intelligence and Emerging Technology (AIET) Initiative, and Google and IBM provide general, unrestricted support to the Institution. The findings, interpretations, and conclusions in this report are not influenced by any donation. Brookings recognizes that the value it provides is in its absolute commitment to quality, independence, and impact. Activities supported by its donors reflect this commitment.